Write better load tests with Python & Locust

I've been writing performance tests for a few years now, but I used to think they were more of a burden than they were worth. We'd wait until the last two weeks before shipping to finally get around to that lingering user story: Load Testing. We'd run the tests and, surprisingly, the results usually looked good! The problem was that I never felt the results reflected how the live service would handle real users at high traffic. Only recently, when I started writing performance test scripts as scenarios that model real user behavior, did I gain confidence in the usefulness of our tests.

Performance Testing

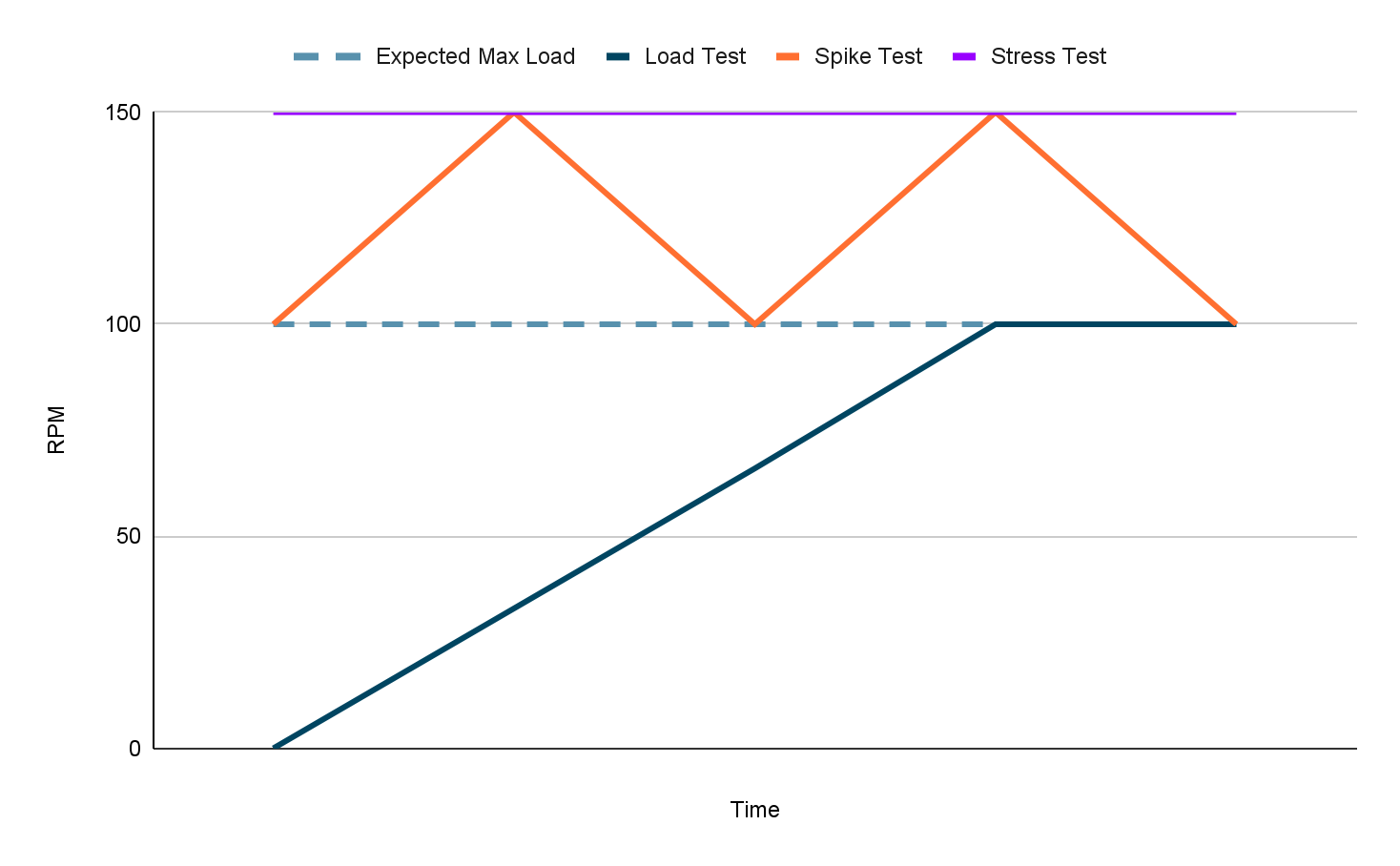

The term performance test is often used interchangeably with load test. There's nothing seriously wrong with that, but more formally, a load test is one type of performance test. The idea of scenario performance testing applies whether you're running a load test, a stress test, or any other form of performance test.

What I've Seen in the Past

Previously, most of my performance testing experience consisted of making rapid HTTP requests to a single endpoint our service exposed. Most commonly, this was done before releasing a new endpoint. Someone would make an educated guess about what our peak requests per second (RPS) to the endpoint would be, and then we'd use a tool to reach that RPS.

In this example, the performance test would make the same request to the "get friends for a user" endpoint at 40 RPS.
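To make the shape of this kind of test concrete, here is a minimal standard-library sketch (no Locust required) of a constant-rate, single-endpoint schedule: every request is identical and evenly spaced. The endpoint path and the function name `fixed_rate_schedule` are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple

# Hypothetical path standing in for the "get friends for a user" endpoint.
GET_FRIENDS = "/friend-service/v1/friends"


def fixed_rate_schedule(rps: int, duration_s: int, path: str = GET_FRIENDS) -> List[Tuple[float, str]]:
    """Return (send_time_s, path) pairs for a non-scenario test.

    Every request targets the same path and is spaced exactly 1/rps seconds
    apart: no think time, no other endpoints, no variation between "users".
    """
    interval_s = 1.0 / rps
    return [(i * interval_s, path) for i in range(rps * duration_s)]


schedule = fixed_rate_schedule(rps=40, duration_s=2)
print(len(schedule))  # 80 identical requests over 2 seconds
```

Nothing in this schedule depends on what any other request did, which is exactly the limitation the scenario approach addresses.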

Scenario Performance Testing

The idea of a scenario performance test is to write your performance tests in a way that models real user behavior. If your multiplayer game allows users to be friends, and you want to performance test your friend-service, what are the most common ways people interact with the service? Likely the first thing every user does is get their own friend list when they load the game. Next, they might make a friend request or accept a pending one. Your scenario performance test should capture these different paths and add delays between steps, as a real user would.

In this example, the performance test makes a variety of requests capturing the sequence of events a real user may perform. We can have the performance testing tool execute the sequence multiple times concurrently, with staggered start times, to simulate concurrent users using the service.
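A minimal sketch of the idea using `asyncio` from the standard library: each simulated user starts slightly later than the previous one, loads their friend list, then either sends or accepts a friend request, with random think time between steps. The action names, the 1 to 5 second think time, and the `TIME_SCALE` speed-up are all hypothetical; a real test would issue HTTP requests where this sketch only appends to a log.

```python
import asyncio
import random

# Hypothetical knobs: think time in simulated seconds, scaled down so that
# 1 simulated second takes 1 ms of real time and the demo finishes quickly.
THINK_TIME_S = (1.0, 5.0)
TIME_SCALE = 0.001


async def friend_service_user(user_id: int, start_delay_s: float,
                              rng: random.Random, log: list) -> None:
    """One simulated user: staggered start, then a realistic sequence with think time."""
    await asyncio.sleep(start_delay_s * TIME_SCALE)
    # The first thing every user does on game load is fetch their own friend list.
    steps = ["get_friend_list"]
    # Then they either send a new friend request or accept a pending one.
    steps.append(rng.choice(["send_friend_request", "accept_friend_request"]))
    for step in steps:
        log.append((user_id, step))  # a real test would issue the HTTP request here
        await asyncio.sleep(rng.uniform(*THINK_TIME_S) * TIME_SCALE)


async def run_users(n_users: int, stagger_s: float, seed: int = 0) -> list:
    rng = random.Random(seed)
    log: list = []
    # Each user starts stagger_s later than the previous one instead of all at once.
    await asyncio.gather(*(friend_service_user(i, i * stagger_s, rng, log)
                           for i in range(n_users)))
    return log


log = asyncio.run(run_users(n_users=5, stagger_s=0.5))
print(len(log))  # 10: two steps for each of the 5 users
```

Because users overlap in time, requests from different paths interleave, which is what lets a scenario test surface interactions between them.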

Complex Systems Require Complex Tests

Modern applications provide countless types of functionality and are often integrated with numerous other complex systems. The interactions users have with your application don't happen in a vacuum: one user completing an achievement can affect another user checking their own achievements.

An example that highlights this point is a standard CRUD app with a relational database. A common approach to performance testing would be to test the create and read endpoints separately. What these tests fail to capture is the effect that writing entries to the database has on other users reading from it at the same time. If the table has an index, every write must also update the index's underlying data structure (commonly a B-tree), occasionally splitting nodes to keep it balanced, so concurrent read queries can see worse performance. Other factors include lock contention and shared resource usage. A scenario performance test models real users creating and reading entries concurrently and provides more realistic performance results.
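As a rough illustration, the sketch below interleaves creates and reads against a single indexed table instead of testing each operation in isolation. It assumes a hypothetical `entries` schema on in-memory SQLite and runs in one process, so it only shows the shape of a mixed workload (reads timed while writes keep updating the same index), not the lock contention you would see from many concurrent clients against a real database.

```python
import random
import sqlite3
import time
from statistics import mean


def run_mixed_workload(n_ops: int, write_ratio: float, seed: int = 0) -> dict:
    """Interleave creates and reads against one indexed table and time each operation."""
    rng = random.Random(seed)
    db = sqlite3.connect(":memory:")
    # Hypothetical schema for illustration only.
    db.execute("CREATE TABLE entries (id INTEGER PRIMARY KEY, name TEXT)")
    db.execute("CREATE INDEX idx_entries_name ON entries (name)")
    latencies = {"create": [], "read": []}
    for _ in range(n_ops):
        name = f"user{rng.randrange(1_000_000)}"
        op = "create" if rng.random() < write_ratio else "read"
        start = time.perf_counter()
        if op == "create":
            # Every insert also has to update idx_entries_name.
            db.execute("INSERT INTO entries (name) VALUES (?)", (name,))
        else:
            db.execute("SELECT id FROM entries WHERE name >= ? LIMIT 10", (name,)).fetchall()
        latencies[op].append(time.perf_counter() - start)
    db.close()
    return latencies


results = run_mixed_workload(n_ops=2_000, write_ratio=0.3)
for op, samples in results.items():
    print(f"{op}: {len(samples)} ops, mean {mean(samples) * 1e6:.1f} us")
```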

Determining Scenario Paths

It's impossible to write a scenario performance test without first understanding how users interact with your service. Determining user behavior is as much an art as it is a science. A good place to start is to look at similar services at your company and use their telemetry data to determine how users flow through your apps. If that isn't possible, your best option may be to ask coworkers to use the service during development and record the actions they take. Some good questions to ask yourself are:

- How many actions will a user take with your service in one session?

- What are the different paths a user will take?

- Are other operations executing against the service concurrently?

- How long is the delay between a user's actions?

- What percentage of requests will result in client-side errors?
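If you do have telemetry or recorded sessions, a few of these questions can be answered directly from the data. The sketch below assumes a hypothetical format where each session is a list of `(timestamp_s, action)` events; the three sample sessions are made up purely to show the calculation.

```python
from collections import Counter
from statistics import median
from typing import Dict, List, Tuple

# Hypothetical telemetry format: each session is a list of (timestamp_s, action) events.
Session = List[Tuple[float, str]]


def summarize_sessions(sessions: List[Session]) -> Dict[str, object]:
    """Estimate actions per session, path weights, and think time from recorded sessions."""
    paths = Counter(tuple(action for _, action in s) for s in sessions)
    # Gaps between consecutive events within the same session approximate think time.
    gaps = [later[0] - earlier[0] for s in sessions for earlier, later in zip(s, s[1:])]
    return {
        "median_actions_per_session": median(len(s) for s in sessions),
        "path_weights": {path: n / len(sessions) for path, n in paths.items()},
        "median_think_time_s": median(gaps) if gaps else None,
    }


# Made-up sample sessions, for illustration only.
sessions = [
    [(0.0, "get_friends"), (4.0, "search"), (9.0, "send_request")],
    [(0.0, "get_friends"), (3.0, "accept_request")],
    [(0.0, "get_friends"), (5.0, "search"), (8.0, "send_request")],
]
summary = summarize_sessions(sessions)
print(summary["median_actions_per_session"], summary["median_think_time_s"])  # 3 4.0
```

The path weights feed directly into the branching probabilities of a scenario script, and the think-time estimate into its wait times.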

Defining Objectives

A performance test of any type is only useful if objectives are laid out ahead of time and the results are compared against them. Previously, I would often see goals like, "Let's see if this new endpoint can handle 1k RPS." Such an objective is vague, isn't grounded in real users' behavior, and makes it hard to know whether the results matched expectations.

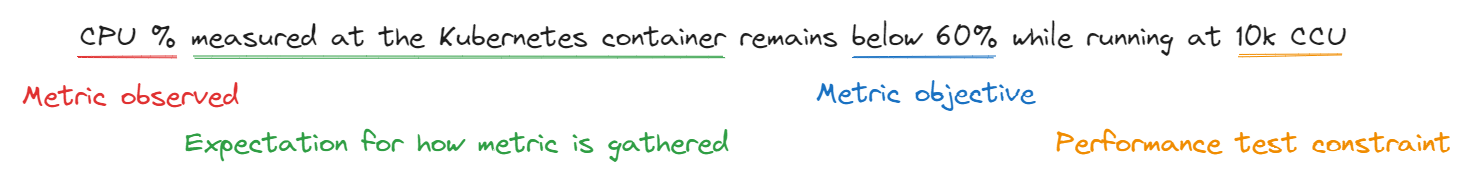

An objective should define a goal but also set boundaries. Agreeing on a template for performance test objectives puts everyone on your team on the same page and helps manage expectations with stakeholders. One template I have found effective is based on how Google's SRE Book defines Service Level Objectives (SLOs). We can expand on this idea for our scenario performance tests and give stakeholders, and ourselves, a clear picture of how the service should behave under load.
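One way to keep such a template consistent is to encode it as data. The sketch below is a hypothetical structure, not a format from the SRE Book: it captures what is measured (a latency percentile for one endpoint), the bound, and the load and duration the bound must hold under, and it checks samples using the nearest-rank percentile.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class PerformanceObjective:
    """An SLO-style test objective: what is measured, the bound, and the load it must hold under.

    Field names are hypothetical; they mirror the parts of an SLO (an indicator,
    an aggregation such as a percentile, and a threshold) plus the test conditions.
    """
    endpoint: str
    percentile: float      # 50 for p50, 99 for p99, ...
    max_latency_ms: float  # the percentile must stay strictly below this
    target_rps: int
    duration_min: int

    def describe(self) -> str:
        return (f"p{self.percentile:g} latency for {self.endpoint} is less than "
                f"{self.max_latency_ms:g}ms at {self.target_rps} RPS for {self.duration_min} minutes")

    def is_met(self, latencies_ms: Sequence[float]) -> bool:
        """Compare the nearest-rank percentile of the samples against the threshold."""
        if not latencies_ms:
            raise ValueError("need at least one latency sample")
        ordered = sorted(latencies_ms)
        rank = max(1, math.ceil(self.percentile / 100 * len(ordered)))
        return ordered[rank - 1] < self.max_latency_ms


objective = PerformanceObjective("search", percentile=50, max_latency_ms=20,
                                 target_rps=100, duration_min=30)
print(objective.describe())
print(objective.is_met([12.0, 15.0, 18.0, 25.0]))  # True: the p50 sample is 15 ms
```

Writing objectives this way makes the pass/fail decision mechanical once the test finishes, instead of a judgment call made after seeing the numbers.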

As an aside, I highly recommend Google's SRE Book, which is free to read online. It's an excellent knowledge base for all things infrastructure.

Example With Locust

After defining your scenarios and deciding on your performance test's objectives, it's time to write some code to execute the test. A tool I've come to rely on for writing scenario performance tests is Locust. The open source framework provides easy-to-use tooling for writing and executing tests. One feature I consider essential for performance testing is the ability to run the scripts both locally and in a deployed environment. Locust is a Python package, so having Python installed is the only prerequisite for running the tests.

```shell
# Install Python if not previously installed
$ brew install python

# Install Locust
$ pip install locust

# That's it! You're ready to write a scenario performance test
```

Testing a friend-service

Let's say our team is working on a friend-service for our hit new MMO. We recently introduced a search feature so players can look up potential friends by partial username matches, then send them a friend request. Everyone agrees we should performance test such an important feature. To highlight the difference between scenario and non-scenario performance tests, we're going to run the following tests:

1. Standard, non-scenario performance test

We'll seed the database, then have the performance test send 100% of its requests to the search endpoint.

2. Scenario performance test

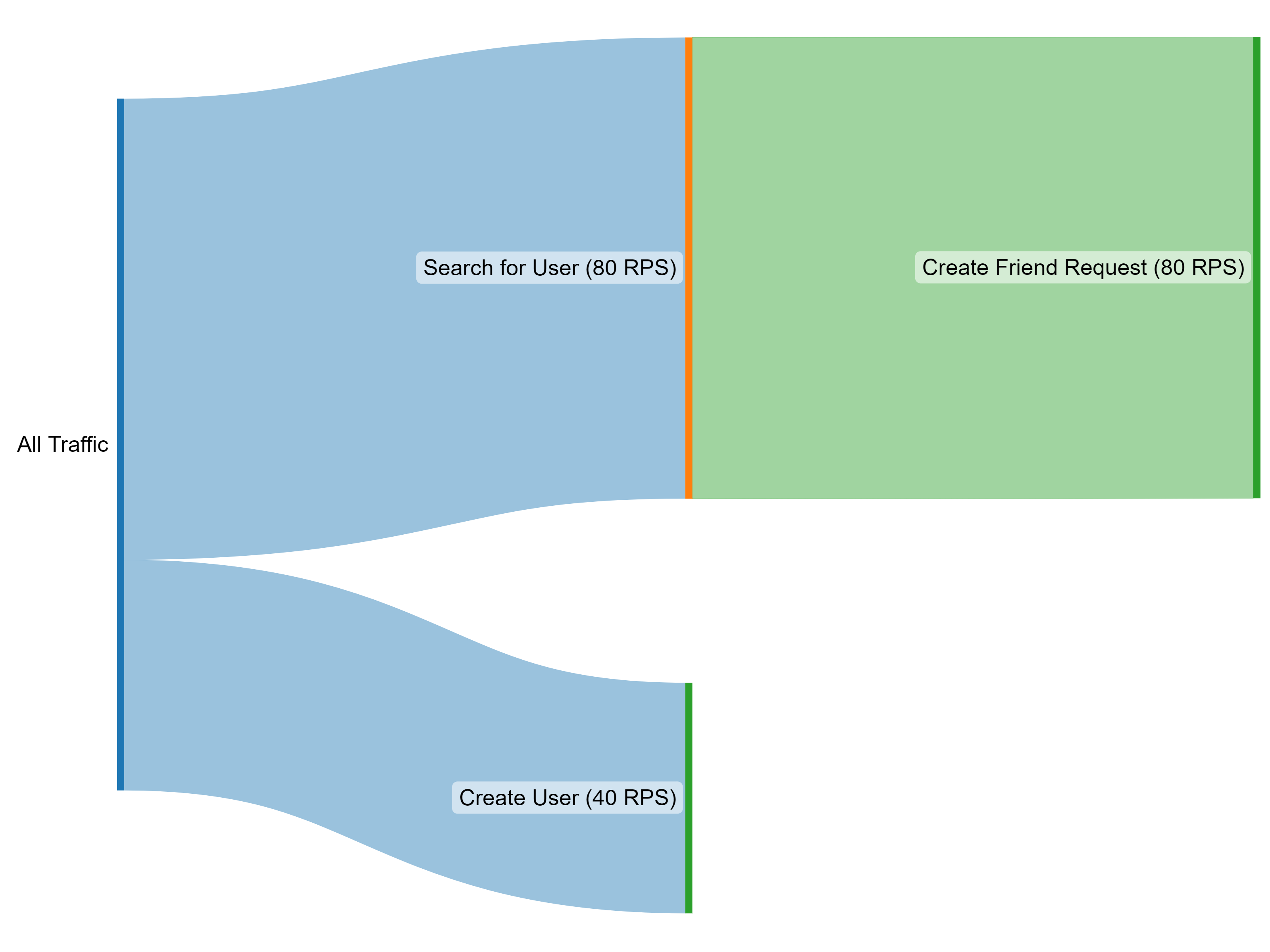

We'll seed the database, then split traffic among three endpoints: search, create user, and create friend request.

Both tests will result in 100 RPS to the service. The scenario approach takes into consideration users making friend requests after searching and new users being created concurrently.

Locust Code

A Locust script is plain Python code; no magic required. Let's look at what it takes to write the scenario performance test. First, we'll configure a Python class as a Locust test and tell Locust we want its tasks to run sequentially.

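```python
import string
from random import choices, randrange

from locust import SequentialTaskSet, between, task, HttpUser

MIN_SECONDS_TIME_BETWEEN_TASKS = 0.8
MAX_SECONDS_TIME_BETWEEN_TASKS = 1.2
SEEDED_USERS = 10000


def random_string(length):
    # Helper the tasks rely on (its definition was not shown in the original
    # script): returns `length` random lowercase ASCII letters.
    return "".join(choices(string.ascii_lowercase, k=length))


class FriendServiceUser(HttpUser):

    @task
    class SequenceOfTasks(SequentialTaskSet):
        # See https://docs.locust.io/en/stable/quickstart.html for more information
        wait_time = between(MIN_SECONDS_TIME_BETWEEN_TASKS, MAX_SECONDS_TIME_BETWEEN_TASKS)

        # Which flow this user picked in task_one: "SEARCH" or "CREATE_USER"
        flow = None

        # Tasks that make HTTP requests will go here
```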

At this point, we have yet to write any tasks that make HTTP requests to the friend-service. The inner class SequenceOfTasks is where we will define those tasks. Think of each @task as one step of a user's journey through your service. The wait_time variable determines how long a user waits between tasks; with between(0.8, 1.2), each wait is a random duration between 0.8 and 1.2 seconds.
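To see what a between-style wait time does, here is an illustrative stand-in written with the standard library. `between_sketch` and `SketchTaskSet` are hypothetical names, and this is not Locust's source: it draws a fresh uniform wait on every call. Because wait_time is assigned as a class attribute, it is called like a method, which is why the returned function accepts (and ignores) the instance; Locust's wait_time functions likewise receive the instance they are called on.

```python
import random
from typing import Callable, Optional


def between_sketch(min_wait: float, max_wait: float) -> Callable[..., float]:
    """Return a function that draws a fresh uniform wait in [min_wait, max_wait] seconds."""
    def wait_time(_instance: Optional[object] = None) -> float:
        return random.uniform(min_wait, max_wait)
    return wait_time


class SketchTaskSet:
    # Same bounds as MIN/MAX_SECONDS_TIME_BETWEEN_TASKS in the friend-service test.
    wait_time = between_sketch(0.8, 1.2)


waits = [SketchTaskSet().wait_time() for _ in range(1_000)]
print(f"min={min(waits):.2f}s max={max(waits):.2f}s mean={sum(waits) / len(waits):.2f}s")
```

Randomizing the wait, rather than using a fixed delay, keeps simulated users from falling into lockstep and hitting the service in synchronized bursts.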

```python
        # ...setup outlined above; these methods live inside SequenceOfTasks...

        @task
        def task_one(self):
            # Determine if running the search-flow or the create-user flow.
            # The search-flow is twice as likely as the create-user flow.
            if randrange(3) == 0:
                self.flow = "CREATE_USER"
                create_user_request = {"username": random_string(15)}
                self.client.post("/friend-service/v1/users", json=create_user_request)
            else:
                self.flow = "SEARCH"
                # name= groups every search under one entry in Locust's statistics
                self.client.get("/friend-service/v1/users?username=" + random_string(4),
                                name="/users?username=?")

        @task
        def task_two(self):
            if self.flow == "SEARCH":
                # Make a friend request from a random seeded user to a non-seeded user
                random_seeded_user = randrange(SEEDED_USERS)
                self.client.post("/friend-service/v1/friends",
                                 json={"userId": random_seeded_user,
                                       "friendId": random_seeded_user + (SEEDED_USERS * 2)})
            # Else if flow == "CREATE_USER" then do nothing
```

We now have two tasks that each Locust user will execute in order. The first task has branching logic: on roughly 1/3 of iterations it calls the create user endpoint, and on the other 2/3 it searches for users in the friend-service. The second task sends a friend request only when the flow is SEARCH.
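The branching above also determines how the scenario's traffic splits across endpoints. This worked calculation uses exact fractions and assumes every iteration completes both tasks without errors; it shows that one pass averages 5/3 requests, so 100 RPS divides into roughly 20 RPS create user, 40 RPS search, and 40 RPS friend requests.

```python
from fractions import Fraction

# From task_one: randrange(3) == 0 selects CREATE_USER one time in three.
P_CREATE_USER = Fraction(1, 3)
P_SEARCH = 1 - P_CREATE_USER


def requests_per_iteration() -> dict:
    """Expected HTTP requests per endpoint for one pass through task_one and task_two."""
    return {
        "create user": P_CREATE_USER,  # task_one, CREATE_USER branch
        "search": P_SEARCH,            # task_one, SEARCH branch
        "friend request": P_SEARCH,    # task_two only sends a request in the SEARCH flow
    }


per_iteration = requests_per_iteration()
total = sum(per_iteration.values())  # 5/3 requests per iteration
share_of_100_rps = {name: float(n / total * 100) for name, n in per_iteration.items()}
print(total)             # 5/3
print(share_of_100_rps)  # {'create user': 20.0, 'search': 40.0, 'friend request': 40.0}
```

In other words, the search endpoint receives about 40 of the 100 RPS in the scenario test, versus all 100 RPS in the non-scenario test.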

Running Locust

```shell
# Start Locust locally
$ locust -f ./performance-tests/test_scenario.py
```

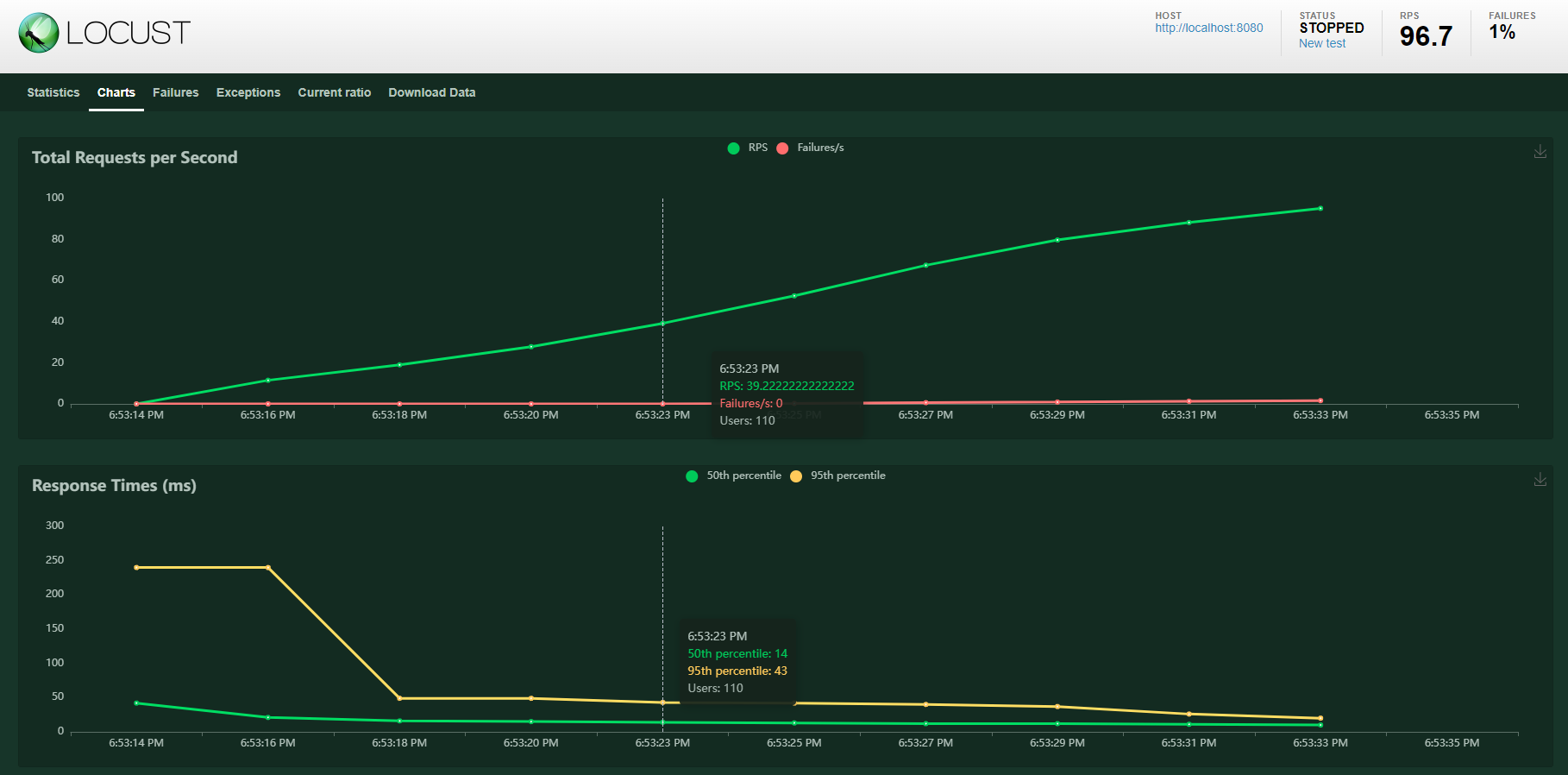

The Locust UI provides a mechanism for starting and stopping performance tests. The number of users refers to Locust users, each of which can be thought of as a single person running the tasks (task_one and task_two) repeatedly. We'll run both the scenario test above and the non-scenario test with enough Locust users to generate 100 total RPS to the service.
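As a starting point for the user count, here is a back-of-the-envelope estimate, not a measured figure. It assumes Locust waits once after each of the two tasks (even when task_two sends nothing), that each wait averages the 1 second midpoint of between(0.8, 1.2), and that response time adds to each request. The function name is hypothetical, and the actual RPS shown in the Locust UI should be used to fine-tune the count.

```python
import math
from fractions import Fraction

REQUESTS_PER_ITERATION = Fraction(5, 3)  # 1 request in task_one + 2/3 of a request in task_two
TASKS_PER_ITERATION = 2                  # task_one and task_two, each followed by a wait
MIN_WAIT_S, MAX_WAIT_S = Fraction("0.8"), Fraction("1.2")
MEAN_WAIT_S = (MIN_WAIT_S + MAX_WAIT_S) / 2  # 1 second


def users_for_target_rps(target_rps: int, mean_response_ms: int = 0) -> int:
    """Rough number of Locust users needed to reach target_rps under the assumptions above."""
    response_s = Fraction(mean_response_ms, 1000)
    iteration_s = TASKS_PER_ITERATION * MEAN_WAIT_S + REQUESTS_PER_ITERATION * response_s
    rps_per_user = REQUESTS_PER_ITERATION / iteration_s
    return math.ceil(target_rps / rps_per_user)


print(users_for_target_rps(100))                       # 120, ignoring response time
print(users_for_target_rps(100, mean_response_ms=20))  # 122, with ~20 ms responses
```

The wait time dominates each iteration here, so the estimate is not very sensitive to response time as long as responses stay fast.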

Objective

We'll define an objective for the scenario performance test and compare our results against it. A thorough test would have several objectives, but for brevity, this one is a good start.

- p50 latency for the search endpoint, as measured from the service, is less than 20ms while running the scenario performance test at 100 RPS for 30 minutes

Results

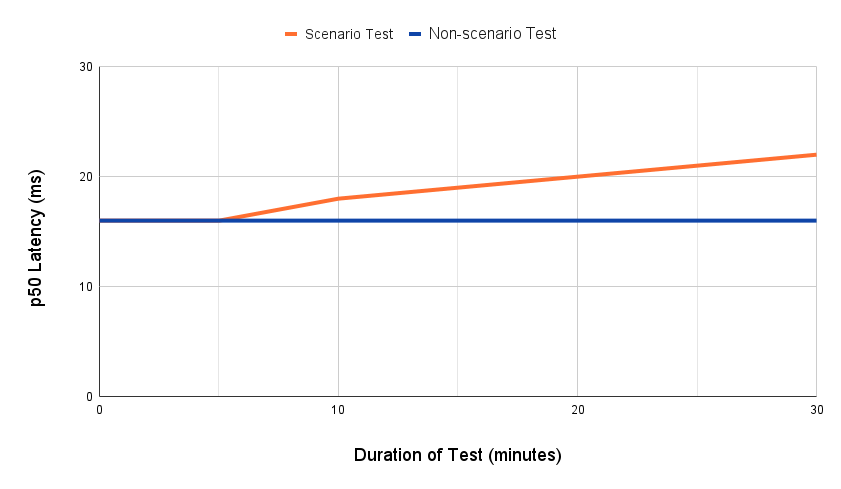

Both performance tests (scenario and non-scenario) ran for 30 minutes, and the search endpoint's latency was recorded every 5 minutes.

We can learn a lot from these results! Both tests ran for the same amount of time, but the search endpoint's latency behaved very differently.

Takeaways

- Search latency increased over time during our scenario performance test

  - 16 ms to 22 ms (a 38% increase) after 30 minutes

- Search latency remained constant during our non-scenario test

  - Consistently 16 ms
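The arithmetic behind these takeaways can be checked in a few lines, using only the readings quoted above and the 20 ms p50 objective. Judging each test by its final reading is a simplification for illustration.

```python
SCENARIO_START_MS, SCENARIO_END_MS = 16, 22  # search p50 at the start and after 30 minutes
NON_SCENARIO_MS = 16                         # constant throughout the non-scenario test
OBJECTIVE_P50_MS = 20                        # threshold from the objective above

increase_pct = (SCENARIO_END_MS - SCENARIO_START_MS) / SCENARIO_START_MS * 100
print(f"{increase_pct:.1f}% increase")  # 37.5% increase, which rounds to 38%
print("scenario meets objective:", SCENARIO_END_MS < OBJECTIVE_P50_MS)      # False
print("non-scenario meets objective:", NON_SCENARIO_MS < OBJECTIVE_P50_MS)  # True
```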

It might seem obvious that our search endpoint slows down as the number of entries to search grows, but having data to confirm a hypothesis is essential. Had we relied solely on the non-scenario test, we might have concluded that the search endpoint met our objective. The scenario performance test showed that we did not meet our objective and that additional optimization may be needed before the endpoint launches.

Wrapping Up

Performance testing is essential to building enterprise applications that scale to millions of users. Scenario performance tests take your performance testing to the next level by approximating live traffic, giving you data that more closely reflects the real world. It's important to call out that this should not be the only way you run performance tests. Tests against single endpoints shouldn't be disregarded, as long as the results are put in context. A variety of performance tests will give your team the best picture of how your app runs and help you provide your customers with the best experience possible.

For more information on performance testing platform services, check out Pragma's excellent article series: The Pragma Standard: Load Testing a Backend for Launch.